RPA and AI Monsters (Based on Real Events)

- ukrsedo

- Sep 18, 2025

- 3 min read

Microsoft Power Automate Experience of Procurement Process Automation

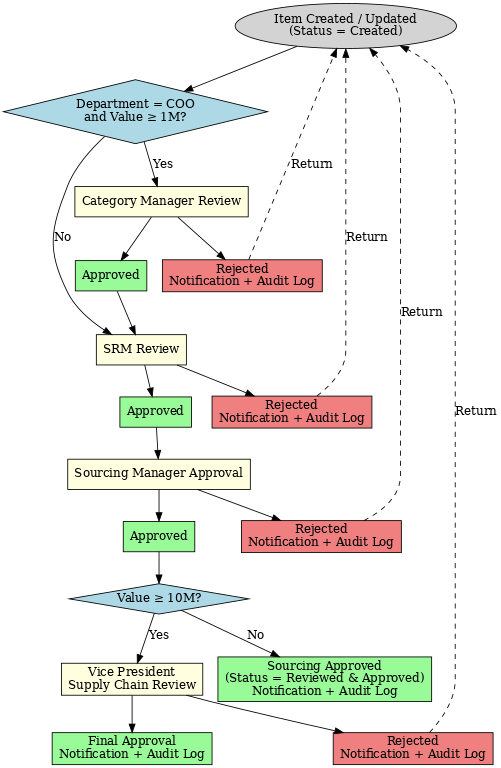

While advocating for RPA as the basis of procurement automation, I spent a couple of weeks automating a single process: the multi-echelon review and approval of a sourcing strategy.

Here are some observations to share:

Complex solutions require complex licensing. You will have to combine Microsoft 365, SharePoint, Power Apps, premium connectors in Power Automate, AI Builder, Copilot Studio, and more. Automation costs money and doesn't come easily.

AI is just a spice, not the food itself. I dare to repeat that automation is 20% Business Analysis (BA), 60% RPA, and only 20% AI, if not less. RPA is a skeleton which holds the process. Once it's in place, you may decide (or not) to spice it up with topical AI improvements.

My experience suggests that you start with the business need (BA), then create the process blueprint, and finally implement it with GPT by your side. You test, simulate, fail, and debug endlessly.

And you constantly fight two monsters that take over the development most of the time.

Hallucination

AI hallucination refers to the tendency of artificial intelligence models, especially large language models, to generate content that sounds convincing but is factually incorrect, fabricated, or entirely unsupported by the underlying data.

These errors don’t come from intentional deception, but from the way AI predicts words and patterns based on training data, sometimes “filling gaps” with plausible yet false details.

In practical terms, hallucinations can mislead users if left unchecked, which is why governance, fact-checking, and human oversight are critical when applying AI in professional settings.

Misinformation and disinformation lead the short-term risks and may fuel instability and undermine trust in governance, complicating the urgent need for cooperation to address shared crises. (c) World Economic Forum.

You have probably faced this many times already, and the problem is well known. But there is another creature, much more complex and harder to manage, especially when you work with AI on the same topic for days, if not weeks.

Context Window

It is the maximum amount of text (tokens) an AI model can “remember” and process at once. Think of it as the AI’s short-term memory span.

The problem is twofold:

Forgetting earlier content – If a conversation, document, or dataset is longer than the context window, older parts are dropped or compressed. This means the AI may “lose track” of earlier details, causing inconsistency or errors in reasoning.

Hallucination risk – When the model lacks access to the full context, it fills gaps by generating plausible-sounding but ungrounded responses. This amplifies hallucinations, especially in long workflows, legal texts, or procurement scenarios where precision is critical.

For practical use, the context window determines the amount of data you can feed into the model at once. Larger windows (e.g., 200k+ tokens) improve continuity. However, they come at a higher computational cost and still don’t guarantee accuracy — the model might reference early details less reliably as the window grows.
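To make the "forgetting" concrete, here is a minimal Python sketch of how a chat history might be trimmed to fit a token budget. It is an illustration only: the helper names `estimate_tokens` and `fit_to_window` are hypothetical, and the "about 4 characters per token" rule is a rough assumption, not how any particular model counts tokens.

```python
from collections import deque


def estimate_tokens(text: str) -> int:
    """Crude estimate (assumption): roughly 4 characters per token."""
    return max(1, len(text) // 4)


def fit_to_window(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose estimated total fits the budget.

    Older messages are dropped first, which is how earlier details
    silently fall out of the conversation.
    """
    kept: deque[str] = deque()
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(msg)
        if used + cost > budget:
            break  # everything older than this point is lost
        kept.appendleft(msg)
        used += cost
    return list(kept)


history = [
    "Sourcing strategy: three approval levels.",
    "Level 2 requires the CFO above the threshold.",
    "Now fix the rejection branch.",
]
visible = fit_to_window(history, budget=20)
```

With a small enough budget, the first message (the one that defined the approval levels) is the first to disappear, and the model then has to guess what it said.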

If you think this monster isn't scary, try spending a few days with GPT mulling over the same topic, where you are the custodian and controller of the discussion and your partner suffers from cyclic memory loss.

Power Automate Structural Limitations

The icing on the cake is that Power Automate has very real structural limitations that often clash with complex workflows.

Nested conditions

Microsoft's documented limits for cloud flows cap the nesting depth of actions (Condition / Switch / Apply to each) at 8 levels, and performance and maintainability degrade rapidly as you approach that depth.

Microsoft community and best-practice guides warn that deep nesting leads to:

- Template validation errors (flow won’t save).

- Run-time failures where downstream actions can’t “see” variables or outputs.

- Debugging nightmares, since error messages often only reference the top scope.

What happens in practice

In real-world cases (like my sourcing approvals), if you push to 7 or more nested layers, you’ll hit errors like: “The template validation failed: action X cannot reference action Y because it is not in the runAfter path.”

This is effectively the platform’s way of saying “your nesting is too deep to guarantee execution order.”
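One way to keep such a process shallow is to describe the approval levels as data and walk them in a single loop instead of nesting one condition inside another. Power Automate is configured visually, so the Python sketch below only illustrates the logic. The stage names, thresholds, and function names are hypothetical and are not taken from my actual flow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    min_value: float  # stage applies when the sourcing value is at least this


# Hypothetical approval ladder; names and thresholds are illustrative only.
STAGES = [
    Stage("Category Manager", 0),
    Stage("Procurement Head", 50_000),
    Stage("CFO", 250_000),
]


def required_approvers(value: float) -> list[str]:
    """Return the approvers needed for a sourcing strategy of this value."""
    return [s.name for s in STAGES if value >= s.min_value]


def review(value: float, decisions: dict[str, bool]) -> str:
    """Walk the stages in order with one loop and early exits, no nesting."""
    for approver in required_approvers(value):
        if approver not in decisions:
            return f"pending: {approver}"
        if not decisions[approver]:
            return f"rejected by {approver}"
    return "approved"
```

For example, `review(60_000, {"Category Manager": True})` returns `"pending: Procurement Head"`. Adding a fourth echelon means adding one entry to `STAGES`, not another nested layer. In a flow, the analogous move might be a single loop over an array of stages, though how well that fits depends on your connectors and licensing.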

Nevertheless, I encourage you to try. The joy of a working process is remarkable, and it is all the more intense the longer you have worked and the deeper you have sunk into desperation and disbelief along the way.
